CLRS - Designing Algorithms

Algorithm

CLRS book, Ch 2

Algorithm Correctness

- For any input instance, it halts with the correct (expected) output

Look at the Pseudocode of InsertionSort(A):

The numbers that we wish to sort are also known as the keys. Although conceptually we are sorting a sequence, the input comes to us in the form of an array with n elements.

1 | for j=2 to A.length |

^1-base index is used here

C++ implementation of Insertion sort

1 |

|

How do we prove the Algorithm Correctness?

Loop invariants

One way to show the correctness => Loop Invariants:

We use loop invariants to help us understand why an algorithm is correct. We must show three things about a loop invariant:

- A loop invariant that is:

- True prior to the first iteration of the loop (initialization)

- before the first iteration, the loop invariant has to be true

- If it’s true before an iteration of the loop, it remains true before the next iteration (maintenance)

- showing that each iteration maintains the loop invariant

- When the loop terminates the invariant gives us a useful property that helps show that the algorithm is correct (termination).

- This is the step where we will prove the correctness of the algorithm

- True prior to the first iteration of the loop (initialization)

A loop invariant is an assertion that is placed at the top of a loop and that must hold true every time the computation returns to the top of the loop.

We need Loop invariants because we need to ensure that the loop terminates. If the loop invariant is always true and the loop is designed to terminate when the invariant is no longer true, then we know that the loop will eventually terminate.

Example: Verify Algorithm Correctness of Insertion Sort

1 | for j=2 to A.length |

^1-base index is used here

Loop Invariant in this case: Sub-array[1 to j-1] is always sorted.

Initialization: Before the first iteration j=2. So sub-array [1:1] is the array to be tested. As it has only one element so it is sorted. Thus loop invariant is satisfied.

Maintenance: Showing that each iteration maintains the loop invariant that sub-array [1 to j-1] is sorted. This can be easily verified by checking the loop invariant after each iteration. In this case it is satisfied.

Termination: Finally, we examine what happens when the loop terminates. The condition causing the for loop to terminate is that j > A.length = n. Because each loop iteration increases j by 1, we must have j = n + 1 at that time. Substituting n + 1 for j in the wording of loop invariant, we have that the subarray A[1…n] in sorted order. Observing that the subarray A[1…n] is the entire array, we conclude that the entire array is sorted. Hence, the algorithm is correct.

Algorithm Efficiency

- Efficiency includes: Memory, Communication bandwidth, Computer hardware (e.g. disk)

- The most important Efficiency is computational time

Example efficiency of InsertionSort

For the moment, let us adopt the following view. A constant amount of time is required to execute each line of our pseudocode. A constant amount of time is required to execute each line of our pseudocode. One line may take a different amount of time than another line, but we shall assume that each execution of the ith line takes time , where is a constant.

A.length = n

The running time of the algorithm is the sum of running times for each statement executed

In best case, array is already sorted when fit into the sorting. In that case for .

We can express this best case running time as for constants and that depend on the statement costs ; it is thus a linear function of .

In worst case, the array is in reverse sorted order. In that case for .

given that and

We can express this worst case running time as for constants and that depend on the statement costs ; it is thus a quadratic function of .

Challenges

- Detailed analysis requires:

- Define instructions in RAM model

- Calculate cost for each instruction

- Add up the costs of the instructions used in an algorithm

How can we have a simple way indicating algorithm’s resource requirements, without getting into details?

Algorithm Analysis

In analyzing algorithms we use two effective techniques

- Worst-case analysis

- Asymptotic notations

Worst-Case Analysis (WCA)

The worst-case running time of an algorithm gives us an upper bound on the running time for any input. Knowing it provides a guarantee that the algorithm will never take any longer. We need not make some educated guess about the running time and hope that it never gets much worse.

Worst-case running time: longest running time for any input size of n

- Upper-bound for any input

- For some algorithms, occurs fairly often (e.g. searching in a database)

- Average case is often roughly as bad as the worst case

Asymphtotic Notations

CLRS book, Ch 3

When we look at input sizes large enough to make only the order of growth of the running time relevant, we are studying the asymptotic efficiency of algorithms. That is, we are concerned with how the running time of an algorithm increases with the size of the input in the limit, as the size of the input increases without bound. Usually, an algorithm that is asymptotically more efficient will be the best choice for all but very small inputs.

-

A concise way to show a set of functions (running time, space, etc.)

-

Why?

- To describe the running time (or other characteristics) of the algorithms expressed as functions of

-

Which running time:

- Sometimes worst-case

- Often no matter what the input (asymptotic notation is useful)

-

For all notations, every member of is asymptotically non-negative ( is non-negative for sufficiently large )

Asymptotic notation is useful because it allows us to compare the efficiency of different algorithms without getting bogged down in the details of their implementations. We can simply look at the asymptotic growth rates of their running times or space requirements to get a sense of how they will perform on large inputs.

notation (Theta notation)

This notation is used to describe both upper and lower bounds on the growth rate of a function. For example, if we say that the running time of an algorithm is Theta(n), we mean that the running time grows linearly with the input size.

- belongs to , for sufficiently large , it is sandwiched between and

- Asymptotically tight bound

Big O notation

This notation is used to describe an upper bound on the growth rate of a function. For example, if we say that the running time of an algorithm is O(n), we mean that the running time grows no faster than linearly with the input size.

- An asymptotic upper-bound

In the literature, we sometimes find O-notation informally describing asymptotically tight bounds

Ω notation (Omega notation)

This notation is used to describe a lower bound on the growth rate of a function. For example, if we say that the running time of an algorithm is Omega(n), we mean that the running time grows at least as fast as linearly with the input size.

- An asymptotic lower-bound

Asymptotic Notations Relations

Theorem: for any two functions and , we have:

if and only if and

(i.e., )

Analogy with real numbers:

- is like

- is like

- is like

Example: Prove

To do so, we must determine positive 2 constants , , and such that

- for all , Dividing by yields

- We can make the right-hand inequality hold for any value of by choosing any constant . Likewise, we can make the left-hand inequality hold for any value of by choosing any constant .

- By choosing , we can verify that .

- Certainly, other choices for the constants exist, but the important thing is that some choice exists.

- a different function would usually require different constants.

Designing Algorithms

Divide-And-Conquer

Recursive in structure

They break the problem into several subproblems that are similar to the original prob- lem but smaller in size, solve the subproblems recursively, and then combine these solutions to create a solution to the original problem.

- Divide: Break the problem to similar subproblems (smaller in size)

- Conquer: straightforwardly solve the subproblems (typically in a recursive manner)

- Combine: Combine the solutions to find the solution for the whole problem

The merge sort algorithm closely follows the divide-and-conquer paradigm.

Take a divide-and-conquer approach for merge sort:

- Divide: Divide the n-element sequence to be sorted into two subsequences of elements each

- Conquer: Sort the two subsequences recursively using merge sort

- Combine: Merge the two sorted subsequences to produce the sorted answer

C++ implementation of Mergesort

1 |

|

Merge Sort Correctness

- Loop invariant:

- At the start of the for loop for merging L and R, the subarray A[p…k-1] contains the k-p smallest elements of L[1…n1+1] and R[1…n2+1], in sorted order. Moreover, L[i] and R[j] are the smallest elements of their arrays that have not been copied back into A.

- Initialization: k=p

- Maintenance: for both conditions L[i] <= R[j] true and false

- Termination: k=r+1

Analyzing recursive (divide-and-conquer) algorithms

When an algorithm contains a recursive call to itself, we can often describe its running time by a recurrence equation or recurrence, which describes the overall running time on a problem of size n in terms of the running time on smaller inputs. We can then use mathematical tools to solve the recurrence and provide bounds on the performance of the algorithm.

- Recurrence equation: overall running time on a problem of size in terms of the running time on smaller inputs

- Mathematical tools to solve the recurrence and find bounds on algorithm’s performance

A recurrence for the running time of a divide-and-conquer algorithm falls out from the three steps of the basic paradigm.

We let be the running time on a problem of size .

- If the problem size is small enough, say for some constant , the straightforward solution takes constant time which we can write as .

- Suppose that our division of the problem yields subproblems, each of which is the size of the original. It takes to solve one subproblem. So to solve subproblems.

- we take time to divide the problem into subproblems

- we take time to combine the solutions to the subproblems into the solution to the original problem

For merge sort:

- Divide: The divide step just computes the middle of the subarray, which takes constant time. Thus, .

- Conquer: We recursively solve two subproblems, each of size , which contributes to the running time.

- Combine: We have already noted that the MERGE procedure on an n-element subarray takes time . Thus .

In the CLRS book, Ch 4, we shall see the “master theorem,” which we can use to show that is Because the logarithm function grows more slowly than any linear function, for large enough inputs, merge sort, with its running time, outperforms insertion sort, whose running time is , in the worst case.

Master Theorem

The Master Theorem is a mathematical formula used to analyze the time complexity of recursive algorithms. It provides a quick way to determine the time complexity of a recursive algorithm in terms of its input size and the complexity of its subproblems.

The master method provides bounds for recurrences of the form:

- where:

- T(n) is the running time of the algorithm for an input size of n.

- a is the number of subproblems the algorithm generates at each level of recursion.

- n/b is the size of each subproblem.

- f(n) is the time complexity of the work done outside of the recursive calls.

- , and is asymptotically positive

Theorem:

- Let be constants, let be a function, and let be defined on the nonnegative integers by the recurrence

- the form characterizes a divide-and-conquer algorithm that creates subproblems, each of which is the size of the original problem, and in which the divide and combine steps together take time.

The Master Theorem has 3 cases, depending on the relationship between the complexity of the subproblems and the complexity of the work done outside of the recursive calls:

-

Then has the following asymptotic bounds

-

If for some constant ,

then

-

If ,

then

-

If for some constant , and if for some constant and all sufficiently large ,

then

-

Example question for Master Theorem:

-

A divide-and-conquer algorithm has the complexity . This algorithm is of:

a)

b)

c)

d)

a = 2 , b = 4, f(n) =

=

Since is clearly , so we need add constant

In that case, it is case 3:

Then

Therefore the answer is a).

-

is of (select one)

=

In that case, it is case 2.

then

Dynamic Programming

Dynamic programming, like the divide-and-conquer method, solves problems by combining the solutions to subproblems.

-

How the divide-and-conquer method solves a problem?

- divide-and-conquer algorithms partition the problem into disjoint subprob- lems, solve the subproblems recursively, and then combine their solutions to solve the original problem.

-

What if sub-problems overlap (share sub-subproblems)?

- Dynamic programming (DP) saves the repeated solutions!

- Dynamic programming is typically applied to optimization problems:

- Has many solutions, each has a value, find the optimal solution (maximum or minimum)

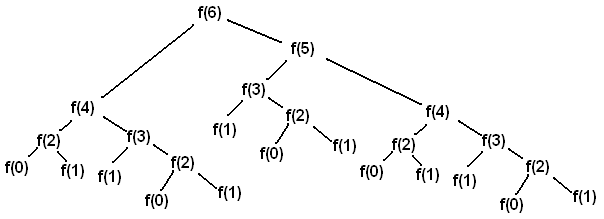

Example: Fib(n)

- Dynamic programming (DP) saves the repeated solution

- Reusing the f(4) tree

1 |

|

1 |

|

When use Dynamic Programming ?

Optimal substructure

- Optimal solutions to a problem incorporate optimal solutions to related subproblems, which we may solve independently.

- Choice depends on solutions to subproblems

Overlapping subproblems

Steps of Dynamic Programming

- Show that a solution to the problem consists of making a choice leaving one or more subproblems to be solved

- Assume that for a given problem, you are given the choice that leads to an optimal solution

- Given this choice, determine which subproblems ensue and how to best characterize the resulting space of subproblems

- Show that the solutions to the subproblems used within an optimal solution to the problem must themselves be optimal by using a “cut- and-paste” technique.

Dynamic Programming Example

Activity-Selection Problem (ASP)

- A set of activities

- Each activity has a start time and a finish time , , takes place (Suppose monotonically ordered finish time)

- and are mutually compatible if their intervals do not overlap

- Select a maximum-size subset of mutually compatible activities

- Objective: Find the maximum number of non-conflicting jobs

Using Dynamic Programming to solve Activity-Selection Problem (ASP):

- Let be a set of the activities start after the activity and finish before

- Let be the max set of mutually compatible activities in

- Then for each activity, let say, , we have two subproblems: and .

- and

Greedy Approach

- Obtains an optimal solution to a problem by making a sequence of locally optimum choices

- Does it produce always an optimum solution?

- Two requirements:

- Greedy-choice property

- Optimal substructure

Key: If you find the optimal subproblem, we can use it to find the optimal problem

Greedy Approach - Greedy-Choice Property

- Greedy-Choice Property (GCP):

- we can assemble a globally optimal solution by making locally optimal (greedy) choices

- Differing from dynamic-programming:

- Choice depends on solutions to subproblems (bottom-up)

- May depend on choices so far, but not on any future choices => making choice before solving subproblems! (top-down)

- GCP should be demonstrated for a problem (via a theorem and its proof)

- If input is preprocessed properly, the greedy approach is efficient!

Steps of Greedy Approach

- Cast the optimization problem as one in which we make a choice and are left with one subproblem to solve

- Prove that there is always an optimal solution to the original problem that makes the greedy choice, so that the greedy choice is always safe

- Demonstrate optimal substructure by showing that, having made the greedy choice, what remains is a subproblem with the property that if we combine an optimal solution to the subproblem with the greedy choice we have made, we arrive at an optimal solution to the original problem

Using **Greedy Approach ** to solve Activity-Selection Problem (ASP):

- Consider any nonempty subproblem , and let be an activity in with the earliest finish time. Then is included in some maximum-size subset of mutually compatible activities of

To do it:

- Initially sort the activities in increasing order of their finish times and create a set to store the selected activities and initialize it with the first activity.

- if the first activity is the optimal solution for a subproblem, then an optimal solution to the original problem consists the first activity, and all the activities in an optimal solution to the remaining problem!

- Then from the second activity onward, include the activity in the activities list if the activity’s start time is greater or equal to the finish time of the last selected activity. Repeat this for each activity involved.

Alternatively, we can select the last compatible activity that starts the latest.

Demo:

Hard Problems

Complexity Classes

What is an NP-complete in computer science?

NP is the set of all decision problems (questions with a yes-or-no answer) for which the ‘yes’-answers can be verified in polynomial time ( where is the problem size, and is a constant) by a deterministic Turing machine. Polynomial time is sometimes used as the definition of fast or quickly.

- : verifiable in polynomial time

- i.e., given a solution we can say in polynomial time that the solution to the problem is correct

- (example: vertex-cover)

- i.e., given a solution we can say in polynomial time that the solution to the problem is correct

P is the set of all decision problems which can be solved in polynomial time by a deterministic Turing machine.

-

: solvable in polynomial time

- i.e., of for some constant and is the size of input

- (example: sorting, shortest-path)

- i.e., of for some constant and is the size of input

-

Observation:

- Since they can be solved in polynomial time, they can also be verified in polynomial time. Therefore P is a subset of NP.

-

: it is and as “hard” as any other problem,

- i.e., solving any - Complete problem in polynomial time means every - Complete problem can be solved in polynomial time!

NP − Completeness

A problem x that is in NP is also in NP-Complete if and only if every other problem in NP can be quickly (ie. in polynomial time) transformed into x.

In other words:

- x is in NP, and

- Every problem in NP is reducible to x

So, what makes NP-Complete so interesting is that if any one of the NP-Complete problems was to be solved quickly, then all NP problems can be solved quickly.

To show a problem that is NP-Complete,

- Perform Reduction to show NP − Completeness

Practice Questions

- For any real constants and

True

- For any value of , an algorithm with runtime runs faster than an algorithm with runtime

False

- This statement: "The running time of insertion sort is at least " is (select one):

- meaningless

- true for some cases

- true in all cases

- false

meaningless. Big O is a upper bound. We only care tight bound.

- The function is of (select one):

- Which one of the following improve the best-case running time of a sort algorithm? (Select all)

Check if the array is sorted do nothing

Check if only the first two elements are not in-place swap them

Check if only the last two elements are not in-place swap them

All are correct and they do improve the running time.

Check if the array is sorted do nothing

Check if only the first two elements are not in-place swap them

Check if only the last two elements are not in-place swap them

- Suppose an implementation of insertion sort with runtime and an implementation of merge sort with runtime . Then (Select one):

Merge-sort implementation is faster than that of the inserstion sort

Insertion sort implementation is faster than that of the merge-sort

Insertion sort is faster only for

Merge sort is faster only for

Insertion sort is faster only for

- is of (select one)

Master Theory problem

- Which problem has the suboptimal substructure? (select all)

Finding the shortest path between two nodes in an undirected graph

Finding the longest path between two nodes in an undirected graph

Finding the maximum number of mutually compatible activities within a period

All problem has the suboptimal substructure.

Finding the shortest path between two nodes in an undirected graph

Finding the longest path between two nodes in an undirected graph

Finding the maximum number of mutually compatible activities within a period