Linear Regression vs Logistic Regression

Generally

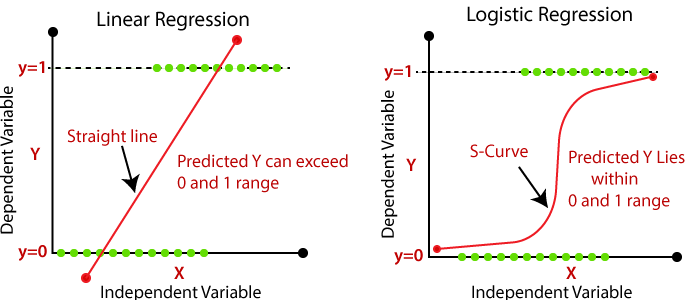

What is the difference between linear regression and logistic regression?

Linear Regression

- In linear regression, the outcome (dependent variable) is continuous. It can have any one of an infinite number of possible values.

Linear regression is meant to resolve the problem of predicting/estimating the output value for a given element (say ). The result of the prediction is a continuous function where the values may be positive or negative. The goal is to be able to fit a model to this data set so you are able to predict that output for new different/never seen elements.

When will it be used?

Used to produce continuous outcome.

- Linear regression is considered as a regresson algorithm (continous output)

Linear regression is used when your response variable is continuous. For instance, weight, height, number of hours, etc.

Can Linear regression output as probabilities?

No. Since the output of Linear regression can be < 0 and > 1 whereas probability can not.

Therefore for probabilities output, we could use logistic regression.

Equation

Linear regression can be solved in two different ways:

- Normal equation (direct way to solve the problem)

- Gradient descent (Iterative approach)

For degree one, y equals to ax plus b, we have only 2 model parameters, there is no advantage to use gradient descent.

Linear regression gives an equation which is of the form

The is the degree 1 form.

In general linear regression could be used to fit more complex models (using higher polynomial degrees).

Polynomial degree

In higher polynomial degree:

More info: Polynomial regression

Metric: Training error

MSE (Mean Square Error)

Where

MAE (Mean Absolute Error)

Where

Logistic Regression

- In logistic regression, the outcome (dependent variable) has only a limited number of possible values.

logistic regression is meant to resolve classification problems where given an element you have to classify the same in N categories. Typical examples are, for example, given a mail to classify it as spam or not, or given a vehicle find to which category it belongs (car, truck, van, etc …). That’s basically the output is a finite set of discrete values.

When will it be used?

Used to produce probability.

- Logistic regression is considered as a classifier algorithm (discrete output)

- In order words, Logistic regression is a classification technique. (although it called “regression”, because it’s underlying technique is quite the same as Linear Regression.)

Logistic regression is used when the response variable is categorical in nature. For instance, yes/no, true/false, red/green/blue, 1st/2nd/3rd/4th, etc.

You have basically 2 types of logistic regression Binary Logistic Regression (Yes/No, Approved/Disapproved) or Multi-class Logistic regression (Low/Medium/High, digits from 0-9 etc)

Equation

In logistic regression, the outcome (dependent variable) has only a limited number of possible values.

Logistic regression problems could be resolved only by using Gradient descent.

The formulation in general is very similar to linear regression the only difference is the usage of different hypothesis function. In linear regression the hypothesis has the form:

is the input vector. In logistic regression the hypothesis function is different:

- it maps any value to the range [0,1]

- which is appropiate to handle propababilities during the classification

Metric: Accuracy

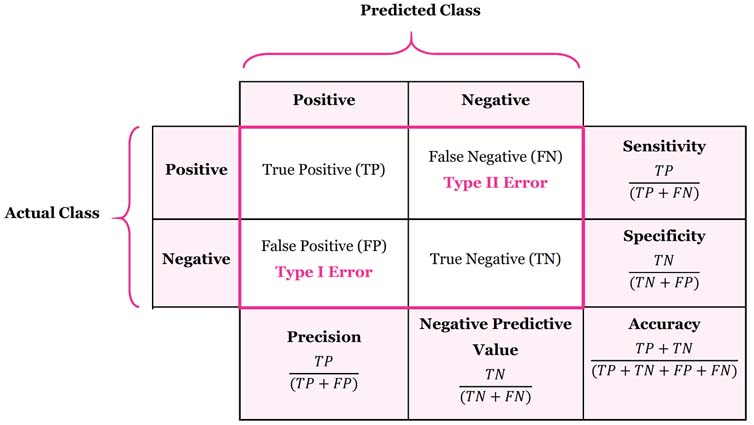

- Often used Confusion Matrix as metric.

True Positive (TP): correctly classified as Positive

False positives (FP): falsely classified as Positive (So it is actually Negative but misclassified)

True negatives (TN): correctly classified as Negative

False negatives (FN): falsely classified as Negative (So it is actually Positive but misclassified)

Confusion Metrics:

- Accuracy (all correct / all) =

- Misclassification (all incorrect / all) =

- Precision (true positives / predicted positives) =

- Sensitivity aka Recall (true positives / all actual positives) =

- Specificity (true negatives / all actual negatives) =