Generative Adversarial Networks - Basically

Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are a powerful neural network architecture used for unsupervised learning, which involves automatically discovering and learning the regularities or patterns in input data so that the model can generate new examples that plausibly could have been drawn from the original dataset. GANs are most often applied to image data and typically use Convolutional Neural Networks, or CNNs, as the generator and discriminator models.

GANs are made up of two models that compete with each other.

The two models are called the Generator and the Discriminator.

Background from Machine Learning Mastery:

The GAN architecture was first described in the 2014 paper by Ian Goodfellow, et al. titled “Generative Adversarial Networks.”

A standardized approach called Deep Convolutional Generative Adversarial Networks, or DCGAN, that led to more stable models was later formalized by Alec Radford, et al. in the 2015 paper titled “Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks”.

What can GANs do?

- Image Manipulation

- Image Generation

Some Practical Examples

- Prediction of Next Frame in a Video

- Text to Image Generation

- Image to Image Translation (CycleGAN)

- Transforming Speech (CycleGAN)

- Enhancing the Resolution of an Image

- Creating Art

GANs - Basically

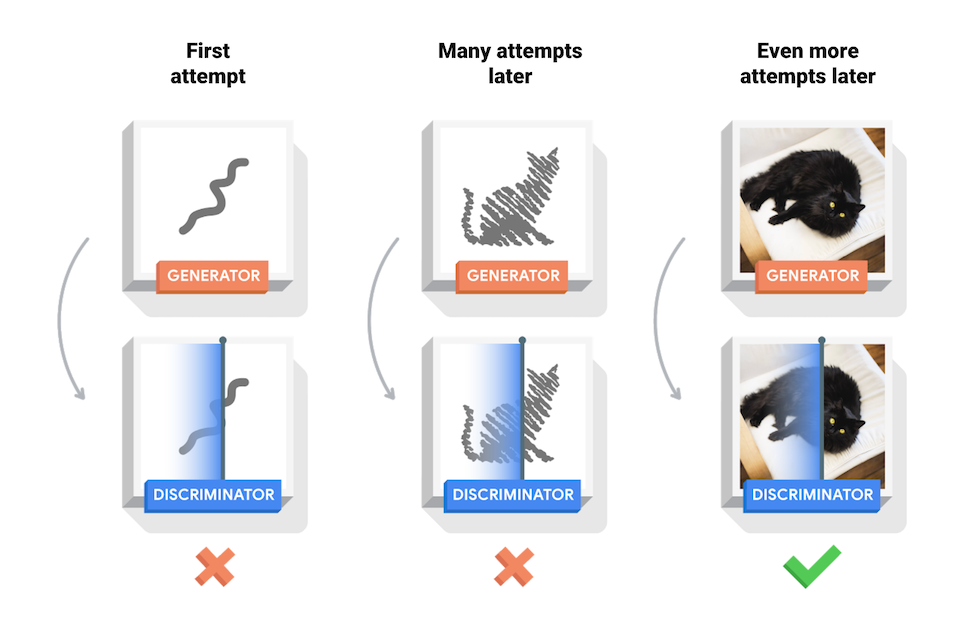

A GAN consists of a pair of neural networks that fight with each other.

One is called the Generator and the other is called the Discriminator.

What Is Meant by “Fight with Each Other”?

In short, the Generator keeps generating samples, which are sent to the Discriminator. The Discriminator has to classify whether each sample is real or generated, and the Generator tries to fool the Discriminator into classifying its generated samples as real. Ideally, the generator produces perfect replicas from the input domain every time, so that the discriminator cannot tell the difference and predicts “unsure” (e.g. 50% real and 50% fake) in every case.

We can think of the generator as being like a counterfeiter, trying to make fake money, and the discriminator as being like police, trying to allow legitimate money and catch counterfeit money. To succeed in this game, the counterfeiter must learn to make money that is indistinguishable from genuine money, and the generator network must learn to create samples that are drawn from the same distribution as the training data.
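The 50% figure has a precise meaning. For a fixed generator, the 2014 Goodfellow et al. paper shows that the best possible discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)), where p_data is the real data distribution and p_g is the distribution of generated samples. Once p_g matches p_data, this gives 1/2 for every x. The sketch below simply evaluates that formula for 1-D Gaussian densities; the Gaussians and their parameters are illustrative assumptions, not something a real GAN computes explicitly.

```python
import math

def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Density of a 1-D normal distribution N(mean, std^2) at x."""
    coeff = 1.0 / (std * math.sqrt(2.0 * math.pi))
    return coeff * math.exp(-0.5 * ((x - mean) / std) ** 2)

def optimal_discriminator(x: float, p_data, p_g) -> float:
    """D*(x) = p_data(x) / (p_data(x) + p_g(x)) for a fixed generator."""
    d, g = p_data(x), p_g(x)
    return d / (d + g)

# Assumption for illustration: the real data follows N(0, 1).
p_data = lambda x: gaussian_pdf(x, 0.0, 1.0)
# An untrained generator whose samples are centred at 2 instead of 0.
p_g_early = lambda x: gaussian_pdf(x, 2.0, 1.0)
# A generator at convergence: its distribution matches the data exactly.
p_g_converged = lambda x: gaussian_pdf(x, 0.0, 1.0)

for x in (-1.0, 0.0, 1.0, 2.0, 3.0):
    early = optimal_discriminator(x, p_data, p_g_early)
    final = optimal_discriminator(x, p_data, p_g_converged)
    print(f"x={x:+.1f}  D*(early)={early:.3f}  D*(converged)={final:.3f}")
```

Early in training, D* is above 1/2 where real data is more likely and below 1/2 where generated data is more likely; at convergence every value is exactly 1/2.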

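Generative modeling is an unsupervised learning problem, although a clever property of the GAN architecture is that the training of the generative model is framed as a supervised learning problem: the discriminator is trained as an ordinary classifier on samples labeled real or fake, and its feedback is what trains the generator.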
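To make the supervised framing concrete, here is a minimal sketch of the two losses, assuming each discriminator output is already a probability D(x) that x is real. Real samples get the label 1, generated samples get the label 0, and the discriminator is scored with ordinary binary cross-entropy. For the generator, the sketch uses the non-saturating variant suggested in the original GAN paper, which scores the generator's samples against the real label. The function and constant names are hypothetical.

```python
import math

REAL_LABEL = 1.0  # discriminator target for samples from the training data
FAKE_LABEL = 0.0  # discriminator target for samples made by the generator

def binary_cross_entropy(p: float, label: float, eps: float = 1e-12) -> float:
    """Standard BCE between a predicted probability p and a 0/1 label."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(label * math.log(p) + (1.0 - label) * math.log(1.0 - p))

def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """Supervised classification loss: real outputs should be 1, fake outputs 0."""
    real_term = sum(binary_cross_entropy(p, REAL_LABEL) for p in d_real) / len(d_real)
    fake_term = sum(binary_cross_entropy(p, FAKE_LABEL) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: the generator's samples are scored against
    the REAL label, so the loss drops as the discriminator is fooled."""
    return sum(binary_cross_entropy(p, REAL_LABEL) for p in d_fake) / len(d_fake)

# An "unsure" discriminator (0.5 everywhere) has loss 2*ln(2).
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # ≈ 1.386
# A confident, correct discriminator: low D loss, high G loss.
print(discriminator_loss([0.9, 0.95], [0.05, 0.1]), generator_loss([0.05, 0.1]))
```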

The Two Models of GAN

The two models, the generator and discriminator, are trained together. The generator generates a batch of samples, and these, along with real examples from the domain, are provided to the discriminator and classified as real or fake.
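The alternating training described above can be sketched with deliberately tiny 1-D models, both of which are assumptions made for illustration: a linear generator G(z) = a*z + b and a logistic discriminator D(x) = sigmoid(w*x + c). Each call to the hypothetical `train_step` first updates the discriminator on a batch of real samples plus a batch of generated ones, then updates the generator through the freshly updated discriminator, using hand-derived gradients of the cross-entropy losses.

```python
import math
import random

def sigmoid(s: float) -> float:
    return 1.0 / (1.0 + math.exp(-s))

def generate(z_batch, p):
    """Toy generator G(z) = a*z + b."""
    return [p["a"] * z + p["b"] for z in z_batch]

def discriminate(x, p):
    """Toy discriminator D(x) = sigmoid(w*x + c): probability that x is real."""
    return sigmoid(p["w"] * x + p["c"])

def d_loss(real, fake, p):
    """-mean log D(real) - mean log(1 - D(fake))."""
    real_term = -sum(math.log(discriminate(x, p)) for x in real) / len(real)
    fake_term = -sum(math.log(1.0 - discriminate(x, p)) for x in fake) / len(fake)
    return real_term + fake_term

def g_loss(z_batch, p):
    """Non-saturating generator loss: -mean log D(G(z))."""
    fakes = generate(z_batch, p)
    return -sum(math.log(discriminate(x, p)) for x in fakes) / len(z_batch)

def train_step(real, z_batch, p, lr=0.05):
    """One alternating update: discriminator first, then generator."""
    # 1) Discriminator step; the generated batch is treated as fixed data.
    fake = generate(z_batch, p)
    gw = gc = 0.0
    for x in real:  # gradient of -log sigmoid(w*x + c)
        r = 1.0 - discriminate(x, p)
        gw -= r * x / len(real)
        gc -= r / len(real)
    for x in fake:  # gradient of -log(1 - sigmoid(w*x + c))
        q = discriminate(x, p)
        gw += q * x / len(fake)
        gc += q / len(fake)
    p["w"] -= lr * gw
    p["c"] -= lr * gc

    # 2) Generator step through the updated discriminator (D held fixed).
    ga = gb = 0.0
    for z in z_batch:  # gradient of -log sigmoid(w*(a*z + b) + c)
        r = 1.0 - discriminate(p["a"] * z + p["b"], p)
        ga -= r * p["w"] * z / len(z_batch)
        gb -= r * p["w"] / len(z_batch)
    p["a"] -= lr * ga
    p["b"] -= lr * gb

if __name__ == "__main__":
    random.seed(0)
    real_data = [random.gauss(4.0, 0.5) for _ in range(64)]  # assumed "real" data
    params = {"a": 1.0, "b": 0.0, "w": 0.0, "c": 0.0}
    for step in range(5):
        z = [random.gauss(0.0, 1.0) for _ in range(64)]  # fresh latent batch
        train_step(real_data, z, params)
        print(step, {k: round(v, 3) for k, v in params.items()})
```

A single linear discriminator can only place one threshold on the number line, so this toy shows the data flow of a training step rather than producing good samples. GAN training is also not guaranteed to converge, so repeated steps may oscillate.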

The Generator

The Generator is responsible for generating new samples.

The Generator Model:

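- takes a fixed-length random vector as input, typically drawn from a Gaussian distribution

- and generates a sample in the domain as output

- after training, points in this multidimensional vector space correspond to points in the problem domain, forming a compressed representation of the data distribution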

This vector space is referred to as a latent space, or a vector space comprised of latent variables. Latent variables, or hidden variables, are those variables that are important for a domain but are not directly observable.

- A latent variable is a random variable that we cannot observe directly.
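The generator's input and output can be sketched as follows. This is a minimal, untrained stand-in, assuming a small fully connected network in place of the convolutional generator mentioned earlier; the layer sizes (a 16-dimensional latent vector, an 8x8 output) and all names are hypothetical. It draws a fixed-length latent vector z from a standard Gaussian and maps it to a sample in the output domain.

```python
import math
import random

LATENT_DIM = 16     # assumed size of the fixed-length random input vector
HIDDEN_DIM = 32     # assumed hidden-layer width
OUTPUT_DIM = 8 * 8  # assumed output: a flattened 8x8 grayscale "image"

def sample_latent(rng: random.Random) -> list[float]:
    """Draw a latent vector z from a standard Gaussian."""
    return [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]

def init_layer(rng: random.Random, n_in: int, n_out: int):
    """Random dense-layer weights (untrained; a real GAN learns these)."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def dense(x, layer, activation):
    """Fully connected layer: activation(W @ x + b)."""
    weights, biases = layer
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]

def relu(v: float) -> float:
    return max(0.0, v)

class ToyGenerator:
    """Hypothetical two-layer MLP standing in for a (usually convolutional) generator."""

    def __init__(self, seed: int = 0):
        rng = random.Random(seed)
        self.hidden = init_layer(rng, LATENT_DIM, HIDDEN_DIM)
        self.out = init_layer(rng, HIDDEN_DIM, OUTPUT_DIM)

    def __call__(self, z: list[float]) -> list[float]:
        h = dense(z, self.hidden, relu)
        return dense(h, self.out, math.tanh)  # tanh keeps "pixels" in [-1, 1]

rng = random.Random(42)
generator = ToyGenerator(seed=0)
z = sample_latent(rng)
fake_sample = generator(z)
print(len(z), len(fake_sample))  # prints: 16 64
```

Here the weights are random, so the output is noise; training is what makes points in the latent space correspond to realistic samples.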

The Discriminator

The Discriminator is responsible for classifying samples as real or fake.

The Discriminator Model:

- Takes a sample as input (either real or generated)

- Produces a binary class label of real or fake (generated) as its output prediction

- Is trained by learning to identify real and fake data correctly

- Is discarded after the training process, as we are only interested in the generator

“Simultaneously, the generator attempts to fool the classifier into believing its samples are real. At convergence, the generator’s samples are indistinguishable from real data, and the discriminator outputs 1/2 everywhere. The discriminator may then be discarded.”
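The discriminator's role as a binary classifier can be sketched with a hypothetical logistic-regression model standing in for the usual CNN: it maps a flattened sample to the probability that the sample is real, then thresholds that probability at 0.5 to produce the real/fake label. The class name, weights, threshold, and data are assumptions for illustration.

```python
import math

def sigmoid(s: float) -> float:
    return 1.0 / (1.0 + math.exp(-s))

class ToyDiscriminator:
    """Hypothetical logistic-regression stand-in for a (usually CNN) discriminator."""

    def __init__(self, weights: list[float], bias: float):
        self.weights = weights
        self.bias = bias

    def prob_real(self, sample: list[float]) -> float:
        """Probability that the flattened sample came from the real data."""
        s = sum(w * x for w, x in zip(self.weights, sample)) + self.bias
        return sigmoid(s)

    def label(self, sample: list[float], threshold: float = 0.5) -> str:
        """Binary prediction: 'real' if P(real) >= threshold, else 'fake'."""
        return "real" if self.prob_real(sample) >= threshold else "fake"

def accuracy(disc: ToyDiscriminator, real_samples, fake_samples) -> float:
    """Fraction classified correctly (real as 'real', fake as 'fake')."""
    correct = sum(disc.label(x) == "real" for x in real_samples)
    correct += sum(disc.label(x) == "fake" for x in fake_samples)
    return correct / (len(real_samples) + len(fake_samples))

# Usage with hand-picked weights on 2-feature samples (illustrative data).
disc = ToyDiscriminator([1.0, 1.0], bias=-1.0)
real_batch = [[1.0, 1.0], [0.9, 0.8]]
fake_batch = [[0.0, 0.1], [0.2, 0.0]]
print(accuracy(disc, real_batch, fake_batch))  # prints 1.0
```

A discriminator that outputs 1/2 everywhere labels every sample the same way, so on a batch with equal numbers of real and fake samples it scores only 50%.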

Conditional GANs

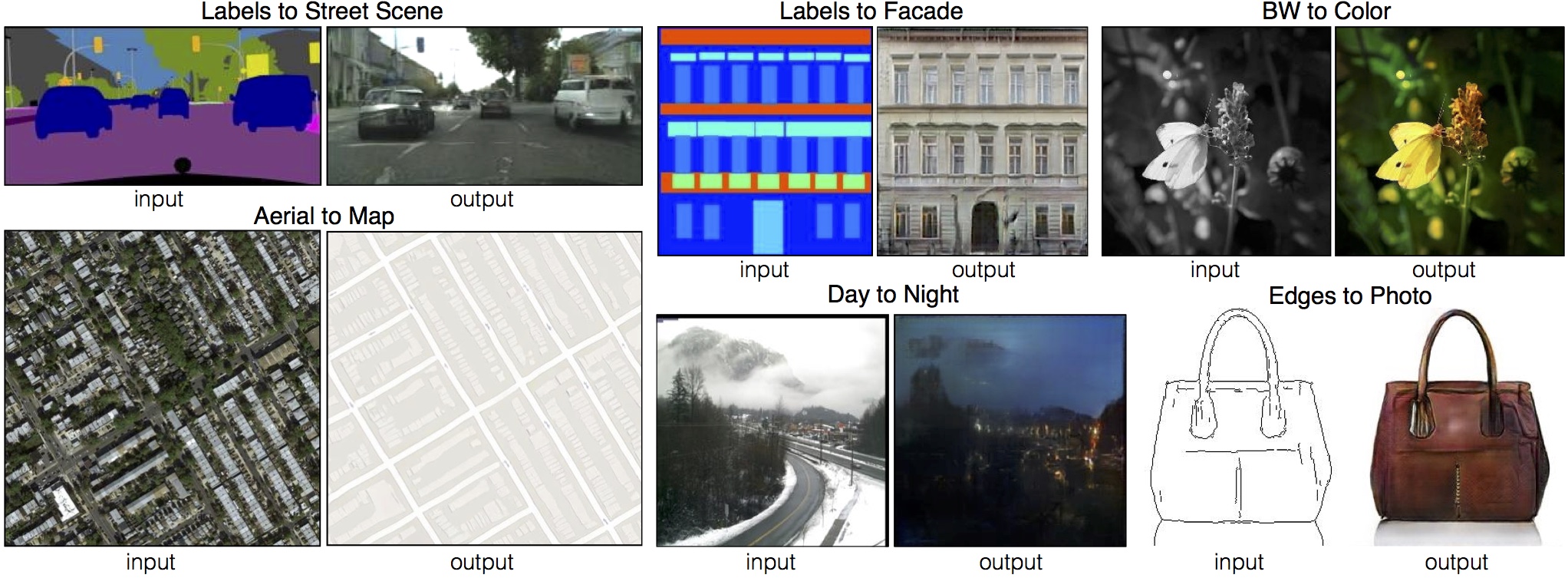

An important extension of the GAN is its use for conditionally generating an output.

Generative adversarial nets can be extended to a conditional model if both the generator and discriminator are conditioned on some extra information y. y could be any kind of auxiliary information, such as class labels or data from other modalities. We can perform the conditioning by feeding y into both the discriminator and generator as [an] additional input layer.

The generative model can be trained to generate new examples from the input domain, where the input, the random vector from the latent space, is provided with (conditioned by) some additional input.
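A minimal sketch of one common way to feed y in as an extra input, assuming y is a class label encoded as a one-hot vector and simply concatenated onto the latent vector z (for the generator) and onto the sample x (for the discriminator). The sizes and helper names are hypothetical; some implementations use a learned embedding of y instead of a one-hot vector.

```python
import random

NUM_CLASSES = 10  # assumed number of class labels y
LATENT_DIM = 16   # assumed size of the latent vector z

def one_hot(label: int, num_classes: int = NUM_CLASSES) -> list[float]:
    """Encode an integer class label y as a one-hot vector."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label must be in [0, {num_classes}), got {label}")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

def generator_input(z: list[float], label: int) -> list[float]:
    """Condition the generator by appending y to the latent vector z."""
    return z + one_hot(label)

def discriminator_input(x: list[float], label: int) -> list[float]:
    """Condition the discriminator by appending the same y to the sample x."""
    return x + one_hot(label)

rng = random.Random(0)
z = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
g_in = generator_input(z, label=3)
print(len(g_in))  # 26 = 16 latent values + 10 label values
```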

The additional input could be a class value, such as male or female in the generation of photographs of people. Taken one step further, GAN models can be conditioned on an example from the domain, such as an image. This enables applications such as text-to-image translation and image-to-image translation, including some of the more impressive uses of GANs: style transfer, photo colorization, transforming photos from summer to winter or day to night, and so on.

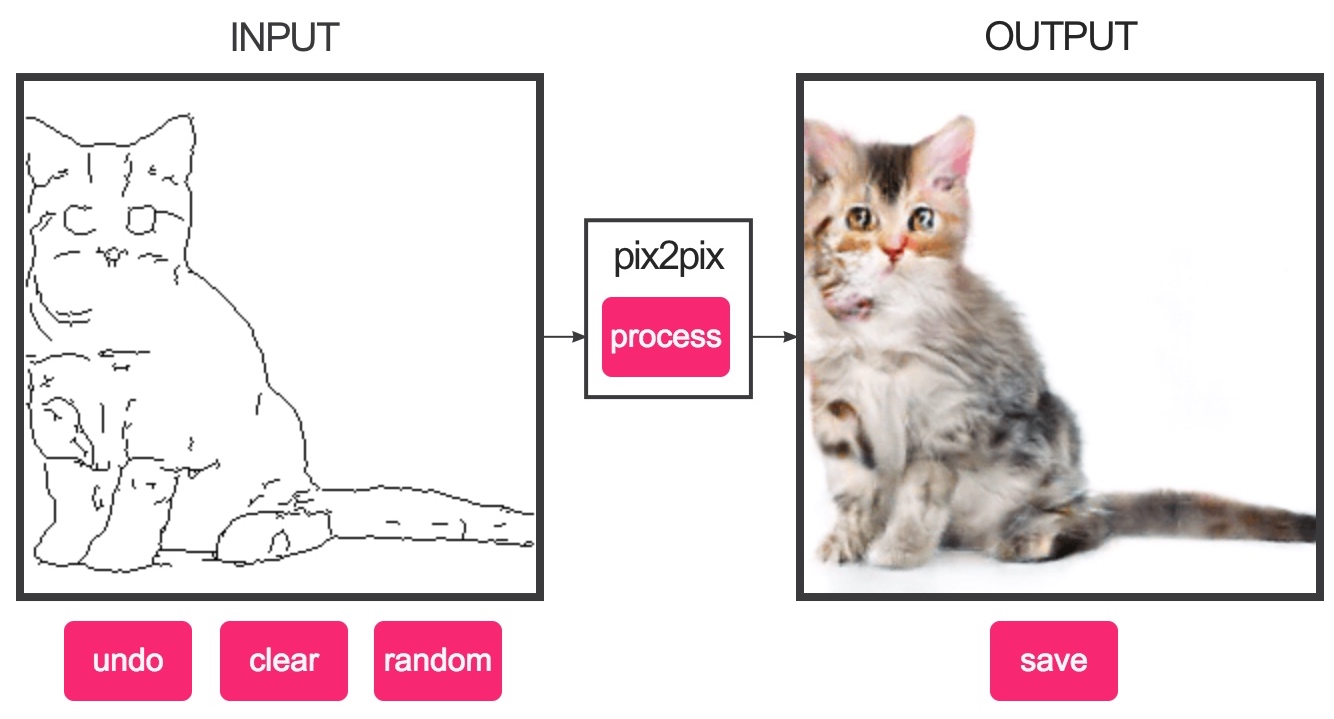

Generating your own image

Check out this awesome application of GANs.

Applications of GANs

There are many applications of Generative Adversarial Networks (GANs).

Data Augmentation

When we do not have enough images in the dataset to train the model properly, we can use GANs to generate different versions of existing images, for example by adding a smile or glasses to a face.

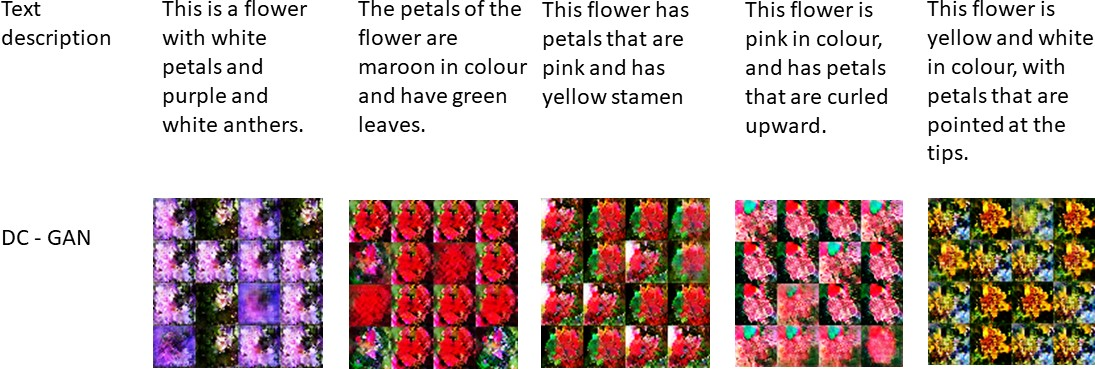

Text-to-Image Translation

This GAN can produce photographs of flowers from text descriptions.

Image-to-Image Translation

Check out Image-to-Image Translation with Conditional Adversarial Nets.

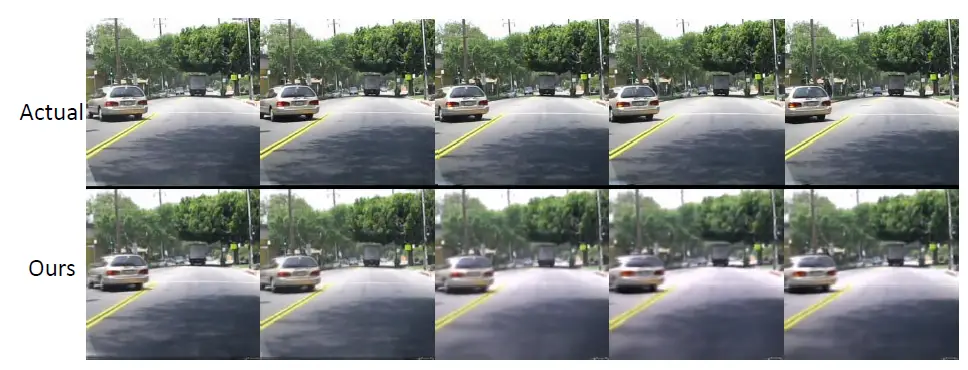

Video / Frame Prediction

Predicting what will happen in the next frame of a video.

Some Interesting GAN Papers

- CA-GAN: Weakly Supervised Color Aware GAN for Controllable Makeup Transfer

- BeautyGAN: Instance-level Facial Makeup Transfer with Deep Generative Adversarial Network

- LipGAN: Generate realistic talking faces for any human speech and face identity

References

Video: What are Generative Adversarial Networks (GANs) and how do they work?

Video: A Friendly Introduction to Generative Adversarial Networks (GANs)

A Gentle Introduction to Generative Adversarial Networks (GANs)

GAN Lab: Play with Generative Adversarial Networks

GAN Dissection: Visualizing and Understanding Generative Adversarial Networks

Video: Deep Generative Modeling | MIT 6.S191

Tensorflow - Deep Convolutional Generative Adversarial Network

11 Mind Blowing Applications of Generative Adversarial Networks (GANs)