Getting RGB and Depth Images from Azure Kinect in ROS

Azure-Kinect + ROS Guide

Pre-requisites:

- ROS and the Azure Kinect are already set up

- The Azure Kinect ROS driver is already set up

What sensors does the Kinect have?

- IR Camera

- Depth Camera

- RGB Camera

- IMU

- Microphone array

In this article we will focus on how to work with the cameras.

IR Camera

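- The IR camera produces a grayscale image whose brightness reflects the infrared light received at each pixel (on the Azure Kinect this is near-infrared light, not a temperature reading).

- It is usually used for detecting or tracking people.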

RGB Camera

- A standard RGB camera.

- An RGB image is a 3D array: three channels, each of which is a 2D array.

Depth Camera

- The depth camera measures the distance from the camera to each point in the scene.

- You need to configure the maximum depth.

- A depth image is a 2D array in which each value is the distance measured at that pixel.
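As a minimal sketch of the two array layouts described above, the following uses NumPy with synthetic data. The 720p resolution, the millimeter unit, and the 4000 mm limit are illustrative assumptions, not values read from the device.

```python
import numpy as np

# Synthetic stand-ins for camera frames (assumed 1280x720 resolution).
height, width = 720, 1280
rgb = np.zeros((height, width, 3), dtype=np.uint8)           # 3 channels of a 2D array
depth_mm = np.full((height, width), 1500, dtype=np.uint16)   # one distance per pixel

# Pretend an object sits 800 mm away in the middle of the view,
# and something far away is visible in the top-left corner.
depth_mm[300:400, 600:700] = 800
depth_mm[0:50, 0:50] = 6000

# Look up the distance at a pixel: the row (y) comes first, then the column (x).
x, y = 640, 360
center_distance_mm = int(depth_mm[y, x])

# Apply a maximum depth: anything beyond the limit is marked invalid (0).
max_depth_mm = 4000  # assumed limit, for illustration only
clipped = np.where(depth_mm > max_depth_mm, 0, depth_mm)

print(rgb.shape, depth_mm.shape, center_distance_mm)
```

Note the `[y, x]` index order: it is a common source of bugs when moving between pixel coordinates and array indices.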

This image demonstrates the camera’s field-of-view as seen from the front at a distance of 2000 mm.

When depth is in NFOV mode, the RGB camera has better pixel overlap in 4:3 than 16:9 resolutions.

What is the use of the Azure-Kinect ROS driver?

The Azure-Kinect ROS driver runs as a node that publishes the sensor data on different topics. All we need to do is receive the messages on the corresponding topic using a subscriber.

Dealing with ROS images

To do image processing, we can use OpenCV. First we need to convert the ROS image message to an OpenCV image.

Some useful API

- sensor_msgs - This package defines messages for commonly used sensors, including cameras and scanning laser rangefinders.

- cv_bridge - This package converts between ROS Image messages and OpenCV images.

- image_transport (C++ only) - This package provides transparent support for transporting images in low-bandwidth compressed formats.

- message_filters - Used when you want to combine several subscribers, for example to receive time-synchronized messages from multiple topics.

cv_bridge can convert both color and depth images. To request a specific format, pass one of the standard encoding strings:

- mono8: CV_8UC1, grayscale image

- mono16: CV_16UC1, 16-bit grayscale image

- bgr8: CV_8UC3 with color information and the order of colors is BGR order

- rgb8: CV_8UC3 with color information and the order of colors is RGB order

- bgra8: CV_8UC4, BGR color image with alpha channel

- rgba8: CV_8UC4, RGB color image with alpha channel
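To make the encoding names concrete, here is a small sketch that mirrors two of these conversions in plain NumPy: dropping the alpha channel of a bgra8 image and reordering bgr8 to rgb8. In a real node cv_bridge does this for you when you request an encoding; the helper names below are hypothetical and exist only for illustration.

```python
import numpy as np


def bgra8_to_bgr8(image):
    """Drop the alpha channel: (H, W, 4) uint8 -> (H, W, 3) uint8."""
    return image[:, :, :3].copy()


def bgr8_to_rgb8(image):
    """Reverse the channel order: BGR -> RGB."""
    return image[:, :, ::-1].copy()


# A single pure-blue, fully opaque pixel in BGRA order.
bgra = np.array([[[255, 0, 0, 255]]], dtype=np.uint8)
bgr = bgra8_to_bgr8(bgra)
rgb = bgr8_to_rgb8(bgr)

print(bgr[0, 0].tolist(), rgb[0, 0].tolist())
```

The same blue pixel is `[255, 0, 0]` in BGR order and `[0, 0, 255]` in RGB order, which is why mixing up the two encodings swaps red and blue in the displayed image.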

Create a New ROS Package in your Workspace

Syntax:

    catkin_create_pkg <pkg name> [dependencies]

Go to <your workspace>/src and run:

    catkin_create_pkg kinect_handler image_transport cv_bridge sensor_msgs rospy roscpp std_msgs

Configure launch file

Configuring Kinect Through Included Launch file

We don’t want to modify the ROS driver’s launch file (driver.launch) directly. That’s why we make our own launch file that includes it.

Your custom launch file:

    <launch>

Explanation of some important args:

- depth_enabled - Enable or disable the depth camera.

- depth_mode - Set the depth camera mode, which affects FOV, depth range, and camera resolution. See the Azure Kinect documentation for full details.

  - Valid options: NFOV_UNBINNED, NFOV_2X2BINNED, WFOV_UNBINNED, WFOV_2X2BINNED, PASSIVE_IR

- color_enabled - Enable or disable the color camera.

- color_format - The format of the RGB camera.

  - Valid options: bgra, jpeg

- color_resolution - Resolution at which to run the color camera.

  - Valid options: 720P, 1080P, 1440P, 1536P, 2160P, 3072P

- fps - FPS to run both cameras at. See the Azure Kinect documentation for full details.

  - Valid options: 5, 15, and 30

- point_cloud - Generate a point cloud from depth data. Requires depth_enabled.

- rgb_point_cloud - Colorize the point cloud using the RGB camera. Requires color_enabled and depth_enabled.

- point_cloud_in_depth_frame - Whether the RGB point cloud is rendered in the depth frame (true) or the RGB frame (false). The point cloud will match the resolution of the depth camera (true) or the RGB camera (false).

- required - Whether the entire launch file should terminate if the node dies.

- sensor_sn - Sensor serial number. If none is provided, the first sensor will be selected.

Further details can be checked in the ROS driver’s launch file.
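For quick experiments, roslaunch also accepts arguments on the command line as `name:=value` pairs. The sketch below builds such a command and rejects values that are not in the option lists above. The helper `build_roslaunch_command` is hypothetical, and the package name `azure_kinect_ros_driver` is an assumption about how the driver is installed in your workspace.

```python
# Option lists copied from the argument descriptions above.
VALID_OPTIONS = {
    "depth_mode": {"NFOV_UNBINNED", "NFOV_2X2BINNED", "WFOV_UNBINNED",
                   "WFOV_2X2BINNED", "PASSIVE_IR"},
    "color_format": {"bgra", "jpeg"},
    "color_resolution": {"720P", "1080P", "1440P", "1536P", "2160P", "3072P"},
    "fps": {"5", "15", "30"},
}


def build_roslaunch_command(package, launch_file, **args):
    """Return a roslaunch argv list, rejecting values outside the listed options."""
    command = ["roslaunch", package, launch_file]
    for name, value in sorted(args.items()):
        if isinstance(value, bool):
            value = "true" if value else "false"  # roslaunch uses lowercase booleans
        value = str(value)
        allowed = VALID_OPTIONS.get(name)
        if allowed is not None and value not in allowed:
            raise ValueError(f"{name}={value!r} is not one of {sorted(allowed)}")
        command.append(f"{name}:={value}")
    return command


command = build_roslaunch_command(
    "azure_kinect_ros_driver",  # assumed package name
    "driver.launch",
    depth_mode="NFOV_UNBINNED",
    color_resolution="720P",
    fps=30,
)
print(" ".join(command))
```

Validating the values before launching gives an immediate, readable error instead of a failure inside the driver at startup.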

Writing a Subscriber to get the RGB image

Reference : Working With ROS and OpenCV in ROS Noetic

C++


Note that for C++ you need to edit the package-level CMakeLists.txt.

This code goes under the find_package(catkin …) block.

    find_package(OpenCV)

This code goes under the include_directories() block.

    include_directories(${OpenCV_INCLUDE_DIRS})

Let’s say we have a file in src called webcam_pub_cpp.cpp.

Add these to the bottom.

    add_executable(webcam_pub_cpp src/webcam_pub_cpp.cpp)

More info about CMakeLists.txt in ROS

Remember to run catkin_make.

Python

    #!/usr/bin/env python

Note that for a Python script you only need to mark the script as executable. You don’t even need to run catkin_make.

To make the file an executable:

    chmod +x <your program>.py
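A minimal sketch of such an RGB subscriber in Python, under a few assumptions: the topic name `/rgb/image_raw` should be confirmed with `rostopic list`, the node name and the helper `describe_frame` are hypothetical, and `python3` is assumed as the interpreter (as on ROS Noetic). The ROS imports are wrapped in a try block so the helper can also be exercised outside a ROS environment.

```python
#!/usr/bin/env python3
import numpy as np

try:  # ROS packages are only importable inside a ROS environment
    import rospy
    from cv_bridge import CvBridge, CvBridgeError
    from sensor_msgs.msg import Image
except ImportError:
    rospy = None

RGB_TOPIC = "/rgb/image_raw"  # assumed topic name; confirm with `rostopic list`


def describe_frame(frame):
    """Summarize an OpenCV-style image (a NumPy array of shape H x W x C)."""
    height, width = frame.shape[:2]
    return f"{width}x{height}, mean intensity {frame.mean():.1f}"


def main():
    if rospy is None:
        print("rospy not found; run this inside a ROS environment")
        return
    rospy.init_node("rgb_listener")
    bridge = CvBridge()

    def callback(msg):
        # Convert the ROS Image message to an OpenCV BGR image.
        try:
            frame = bridge.imgmsg_to_cv2(msg, desired_encoding="bgr8")
        except CvBridgeError as err:
            rospy.logerr("cv_bridge conversion failed: %s", err)
            return
        rospy.loginfo_throttle(1.0, describe_frame(frame))

    rospy.Subscriber(RGB_TOPIC, Image, callback, queue_size=1)
    rospy.spin()  # keep the node alive until shutdown


if __name__ == "__main__":
    main()
```

A `queue_size` of 1 makes the subscriber drop stale frames rather than fall behind when the callback is slower than the camera.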

Using a depth camera in ROS to get distance

If you use the 32FC1 encoding, each pixel value of the image should already be the distance in meters.

    #!/usr/bin/env python
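A minimal sketch of a depth subscriber that reports the distance at the image center. It assumes the topic `/depth/image_raw` (confirm with `rostopic list`) and follows the usual ROS depth image convention (REP 118): 32FC1 values are meters with NaN for invalid pixels, and 16UC1 values are millimeters with 0 for invalid pixels. The helper `distance_in_meters` and the node name are hypothetical.

```python
#!/usr/bin/env python3
import numpy as np

try:  # ROS packages are only importable inside a ROS environment
    import rospy
    from cv_bridge import CvBridge
    from sensor_msgs.msg import Image
except ImportError:
    rospy = None

DEPTH_TOPIC = "/depth/image_raw"  # assumed topic name; confirm with `rostopic list`


def distance_in_meters(depth, x, y):
    """Return the distance at pixel (x, y) in meters, or None if it is invalid."""
    value = float(depth[y, x])  # row (y) first, then column (x)
    if depth.dtype == np.uint16:
        value /= 1000.0  # 16UC1 is assumed to hold millimeters
    if not np.isfinite(value) or value <= 0.0:
        return None
    return value


def main():
    if rospy is None:
        print("rospy not found; run this inside a ROS environment")
        return
    rospy.init_node("depth_listener")
    bridge = CvBridge()

    def callback(msg):
        # "passthrough" keeps the encoding the driver published.
        depth = bridge.imgmsg_to_cv2(msg, desired_encoding="passthrough")
        height, width = depth.shape[:2]
        distance = distance_in_meters(depth, width // 2, height // 2)
        if distance is None:
            rospy.logwarn_throttle(1.0, "no valid depth at the image center")
        else:
            rospy.loginfo_throttle(1.0, "center distance: %.3f m" % distance)

    rospy.Subscriber(DEPTH_TOPIC, Image, callback, queue_size=1)
    rospy.spin()


if __name__ == "__main__":
    main()
```

Checking the array dtype instead of hard-coding one encoding keeps the callback usable whichever depth format the driver is configured to publish.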

Depth Pro-processing

https://github.com/microsoft/Azure_Kinect_ROS_Driver/issues/170

Writing Multiple Subscribers to get RGB and depth images

Adding message_filters dependency to package

Simply edit <your package>/package.xml:

How to add a system dependency to a package

    <build_depend>message_filters</build_depend>

Code Implementation

    #!/usr/bin/env python
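A minimal sketch of receiving RGB and depth images together with message_filters, using `ApproximateTimeSynchronizer` to pair messages whose timestamps are close. The topic names are assumptions (in particular `/depth_to_rgb/image_raw` is assumed to carry depth aligned to the color image; confirm with `rostopic list`), and the helper `keep_near_pixels`, the 1.5 m limit, and the 0.05 s slop are illustrative choices.

```python
#!/usr/bin/env python3
import numpy as np

try:  # ROS packages are only importable inside a ROS environment
    import message_filters
    import rospy
    from cv_bridge import CvBridge
    from sensor_msgs.msg import Image
except ImportError:
    rospy = None

RGB_TOPIC = "/rgb/image_raw"             # assumed topic name
DEPTH_TOPIC = "/depth_to_rgb/image_raw"  # assumed: depth aligned to the color image


def keep_near_pixels(color, depth_m, max_distance_m):
    """Black out color pixels whose depth is invalid or beyond max_distance_m."""
    if color.shape[:2] != depth_m.shape:
        raise ValueError("color and depth must have the same resolution")
    near = np.isfinite(depth_m) & (depth_m > 0) & (depth_m <= max_distance_m)
    result = color.copy()
    result[~near] = 0
    return result


def main():
    if rospy is None:
        print("rospy not found; run this inside a ROS environment")
        return
    rospy.init_node("rgbd_listener")
    bridge = CvBridge()

    def callback(rgb_msg, depth_msg):
        color = bridge.imgmsg_to_cv2(rgb_msg, desired_encoding="bgr8")
        depth = bridge.imgmsg_to_cv2(depth_msg, desired_encoding="passthrough")
        depth_m = depth.astype(np.float32)
        if depth.dtype == np.uint16:
            depth_m /= 1000.0  # 16UC1 is assumed to hold millimeters
        near = keep_near_pixels(color, depth_m, max_distance_m=1.5)
        kept = np.count_nonzero(near.any(axis=2))
        rospy.loginfo_throttle(1.0, "kept %d non-black pixels" % kept)

    rgb_sub = message_filters.Subscriber(RGB_TOPIC, Image)
    depth_sub = message_filters.Subscriber(DEPTH_TOPIC, Image)
    sync = message_filters.ApproximateTimeSynchronizer(
        [rgb_sub, depth_sub], queue_size=10, slop=0.05)
    sync.registerCallback(callback)
    rospy.spin()


if __name__ == "__main__":
    main()
```

The synchronized callback receives one message per subscriber, in the order the subscribers were passed in, so the color and depth arrays can be combined pixel by pixel.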

Writing a Simple Object Detector

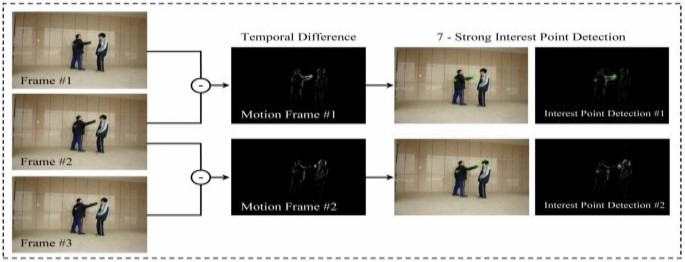

Reference : CSDN: 運動目標檢測的四種方法 (Four Methods of Moving Object Detection)

Common ways to track a moving object:

- Temporal Difference (consecutive inter-frame difference method)

  - 2-Frame Difference

  - 3-Frame Difference

- Background Subtraction (background difference method)

Implementations of Simple Object Detector

Temporal Difference. This method uses two images from different frames (usually frame $k-1$ and frame $k$). Subtracting $f_{k-1}$ from $f_k$ yields a difference image $D_k = |f_k - f_{k-1}|$. Applying a threshold to the difference image then gives the tracking mask. Using a 3-Frame Difference ($f_{k-1}$, $f_k$, $f_{k+1}$) yields a better result. This algorithm is usually combined with other algorithms.
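The temporal difference idea can be sketched in a few lines of NumPy. The frames are assumed to be grayscale uint8 images, the threshold of 25 is arbitrary, and combining the two difference masks with a bitwise AND is one common formulation of the 3-frame variant, not necessarily the one used in the reference.

```python
import numpy as np


def two_frame_mask(prev, curr, threshold=25):
    """Threshold the absolute difference of two grayscale frames (255 = changed)."""
    diff = np.abs(curr.astype(np.int16) - prev.astype(np.int16))
    return (diff > threshold).astype(np.uint8) * 255


def three_frame_mask(prev, curr, nxt, threshold=25):
    """Keep only pixels that changed in both consecutive frame pairs."""
    return np.bitwise_and(two_frame_mask(prev, curr, threshold),
                          two_frame_mask(curr, nxt, threshold))


def make_frame(start):
    """Synthetic 1x8 frame with a bright 2-pixel object starting at `start`."""
    frame = np.zeros((1, 8), dtype=np.uint8)
    frame[0, start:start + 2] = 200
    return frame


f0, f1, f2 = make_frame(0), make_frame(2), make_frame(4)
mask2 = two_frame_mask(f0, f1)
mask3 = three_frame_mask(f0, f1, f2)
print(mask2[0].tolist())
print(mask3[0].tolist())
```

In this toy example the 2-frame mask marks both the old and the new position of the object, while the 3-frame mask keeps only its position in the middle frame. The cast to int16 before subtracting avoids uint8 wrap-around.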

Background Subtraction. This method uses two images: a background frame $B$ and the current frame $f_k$. Apply a Gaussian blur to both frames, then subtract them to obtain a difference image $D_k = |f_k - B|$. Applying a threshold to the difference image then gives the tracking mask. It is commonly used in real-time tracking because it requires little computation. The only requirement is that the camera must be static: everything in the background (including the lighting) must be stationary, and only the objects may move.

Code Implementation of Background Subtraction

You might need to let the camera warm up before capturing the background image.
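A minimal NumPy sketch of background subtraction as described above: blur both frames, subtract, then threshold. The frames are assumed to be grayscale images, and the kernel size, sigma, and threshold are arbitrary illustration values. In a ROS node you would typically use OpenCV instead (`cv2.GaussianBlur`, `cv2.absdiff`, `cv2.threshold`), with the background taken from a frame captured after the camera has warmed up.

```python
import numpy as np


def gaussian_blur(image, size=5, sigma=1.0):
    """Separable Gaussian blur with edge padding (size must be odd)."""
    offsets = np.arange(size) - size // 2
    kernel = np.exp(-(offsets ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(image.astype(np.float32), size // 2, mode="edge")
    # Blur along rows first, then along columns.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def foreground_mask(background, frame, threshold=25):
    """Return a uint8 mask (255 = differs from the background, 0 = background)."""
    diff = np.abs(gaussian_blur(frame) - gaussian_blur(background))
    return (diff > threshold).astype(np.uint8) * 255


# Synthetic scene: an empty background and a frame with a bright 6x6 object.
background = np.zeros((20, 20), dtype=np.uint8)
frame = background.copy()
frame[7:13, 7:13] = 200

mask = foreground_mask(background, frame)
print(mask.shape, int(mask[10, 10]), int(mask[0, 0]))
```

Blurring before the subtraction suppresses single-pixel sensor noise, which would otherwise show up as speckles in the thresholded mask.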